How AI Is Amplifying (Not Replacing) QA Testing

Every QA tester knows the routine: the ritual of checks and rechecks before showtime. But in today’s AI-driven world, a new question echoes through the booth: who’s really holding the mic now – the human tester or the machine beside them?

The truth is, this isn’t about replacement; it’s about partnership. In QA testing, repetition and precision are everything, and AI is amplifying those strengths – not replacing the people behind them. Think of AI as the ultimate sound engineer: fine-tuning our work, reducing repetitive noise, and ensuring nothing gets lost in the mix.

Hype suggests AI will replace testers, but QA depends on human context, something AI can’t replicate. Software development is messy, nuanced, and very human. Bugs don’t just live in code syntax – they live in assumptions, business rules, user behavior, and weird edge cases nobody thought of until a real customer stumbled across them.

AI can’t fully understand context the way a seasoned QA tester can. For example, when testing a healthcare application, a bug in how medical data is displayed might not be “wrong” from a purely technical perspective, but it could be dangerous or misleading for doctors and patients. That judgment call – the ability to weigh compliance, safety, and human experience – comes from people, not algorithms.

The Reality: AI as a Sidekick

So, what does AI bring to the QA table? Think of it as a tireless assistant who doesn’t complain about the boring stuff. AI is great at:

• Repetitive execution: Running the same test suite hundreds of times with slightly different variables.

• Pattern recognition: Spotting anomalies in logs or performance metrics faster than the human eye.

• Prediction: Suggesting where defects are most likely to appear based on historical data.

Here are a few scenarios where AI and testers can work side by side:

Scenario 1: Visual Accuracy Across Devices

A QA tester working on an e-commerce website needs to confirm that product pages look consistent across dozens of browsers and devices. Instead of manually flipping through each one, AI can scan screenshots to highlight potential layout or design issues. The tester then decides which differences impact usability versus which are just cosmetic or irrelevant.

AI handles the visual grunt work – testers make the final call on what truly affects the user experience.
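
For readers who want a peek under the hood, here is a minimal Python sketch of the “flag it, then let a human decide” split. It is deliberately simple: a plain pixel comparison stands in for an AI visual-testing model, screenshots are assumed to be small grids of grayscale values rather than real image files, and the names `compare_screenshots`, `triage`, and both thresholds are hypothetical.

```python
from dataclasses import dataclass

# Assumption: a screenshot is a 2D grid of grayscale pixel values (0-255).
# A real tool would load image files; this keeps the sketch dependency-free.
Screenshot = list[list[int]]


@dataclass
class VisualDiff:
    device: str
    changed_ratio: float  # fraction of pixels that differ beyond the tolerance
    needs_review: bool    # True means a human tester should take a look


def compare_screenshots(baseline: Screenshot, candidate: Screenshot,
                        pixel_tolerance: int = 10) -> float:
    """Return the fraction of pixels that differ by more than pixel_tolerance."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        return 1.0  # different dimensions: treat as a full mismatch
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > pixel_tolerance
    )
    return changed / total if total else 0.0


def triage(baseline: Screenshot, captures: dict[str, Screenshot],
           review_threshold: float = 0.01) -> list[VisualDiff]:
    """Flag every device capture whose difference exceeds review_threshold."""
    results = []
    for device, shot in captures.items():
        ratio = compare_screenshots(baseline, shot)
        results.append(VisualDiff(device, ratio, ratio > review_threshold))
    return results


# Example: a 4x4 white page, plus one capture where two pixels turned black.
baseline = [[255] * 4 for _ in range(4)]
captures = {
    "desktop-chrome": [[255] * 4 for _ in range(4)],
    "mobile-safari": [[255] * 4 for _ in range(3)] + [[0, 0, 255, 255]],
}
for diff in triage(baseline, captures):
    print(diff)
```

The code only decides what to surface. Whether a flagged difference hurts usability or is purely cosmetic is still the tester’s call.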

Scenario 2: Generating Smarter Regression Tests

Imagine a financial application where users frequently move between account summaries, transfers, and bill payments. By observing these common user paths, AI can automatically suggest regression tests for those workflows. The QA tester reviews and adjusts them, ensuring the tests align with business rules and edge cases AI might miss.

AI can map out common user journeys and draft regression tests – but testers ensure every step aligns with real business logic and user expectations.
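
Here is a minimal Python sketch of the drafting half of that loop, assuming navigation sessions are already available as ordered lists of screen names. Counting the most frequent paths is a simple stand-in for whatever model a real AI tool uses, and `common_paths`, `draft_regression_tests`, and the sample screens are hypothetical.

```python
from collections import Counter

# Assumption: each session is the ordered list of screens one user visited,
# e.g. exported from analytics. The screen names below are made up.
Session = tuple[str, ...]


def common_paths(sessions: list[Session], length: int = 3,
                 top_n: int = 2) -> list[tuple[Session, int]]:
    """Count every contiguous run of `length` screens; return the most frequent."""
    counts = Counter()
    for session in sessions:
        for start in range(len(session) - length + 1):
            counts[session[start:start + length]] += 1
    return counts.most_common(top_n)


def draft_regression_tests(paths: list[tuple[Session, int]]) -> list[dict]:
    """Turn frequent paths into draft test cases that a tester must review."""
    drafts = []
    for path, occurrences in paths:
        drafts.append({
            "title": "Regression: " + " > ".join(path),
            "occurrences": occurrences,
            "steps": [f"Navigate to '{screen}' and verify it loads" for screen in path],
            # The tester still adds expected results, business rules, and edge cases.
            "status": "draft - needs tester review",
        })
    return drafts


sessions = [
    ("login", "summary", "transfer", "summary"),
    ("login", "summary", "transfer", "confirm"),
    ("login", "summary", "bill_pay"),
]
for draft in draft_regression_tests(common_paths(sessions)):
    print(draft["title"], "| seen", draft["occurrences"], "times")
```

Note the `status` field: every generated case starts life as a draft, which mirrors the review step described above.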

Scenario 3: Prioritizing Risky Areas

In a large enterprise system, hundreds of code files might change in a single sprint. Instead of running every test against every update, AI can analyze which parts of the application historically fail most often or are most sensitive to changes. The tester uses this insight to prioritize critical areas, while still performing exploratory testing elsewhere.

AI pinpoints the riskiest areas of change so testers can focus their time where it matters most.
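
A minimal Python sketch of that kind of prioritization follows. The scoring rule (a smoothed historical failure rate multiplied by the number of files changed this sprint) is an illustrative heuristic, not the method of any particular AI tool, and `ModuleHistory`, `risk_score`, `prioritize`, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    name: str
    test_runs: int      # historical test runs covering this module
    test_failures: int  # how many of those runs failed
    files_changed: int  # files touched in the current sprint


def risk_score(module: ModuleHistory) -> float:
    """Heuristic risk: smoothed historical failure rate times change volume."""
    if module.files_changed == 0:
        return 0.0  # untouched modules carry no change-driven risk this sprint
    # Add-one (Laplace) smoothing keeps modules with little history away from 0% or 100%.
    failure_rate = (module.test_failures + 1) / (module.test_runs + 2)
    return failure_rate * module.files_changed


def prioritize(modules: list[ModuleHistory]) -> list[tuple[str, float]]:
    """Return changed modules ordered from riskiest to least risky."""
    scored = [(m.name, risk_score(m)) for m in modules]
    return sorted((s for s in scored if s[1] > 0), key=lambda s: s[1], reverse=True)


modules = [
    ModuleHistory("payments", test_runs=98, test_failures=18, files_changed=5),
    ModuleHistory("reporting", test_runs=48, test_failures=2, files_changed=10),
    ModuleHistory("profile", test_runs=8, test_failures=0, files_changed=0),
]
for name, score in prioritize(modules):
    print(f"{name}: {score:.2f}")
```

A ranked list like this decides where the tester looks first; it does not replace exploratory testing in the areas that score low.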

Scenario 4: Log and Data Analysis

When performance issues pop up under heavy load, the sheer amount of data can overwhelm a manual review. AI can sift through logs, detect unusual spikes, and flag where bottlenecks are most likely. A tester then investigates the flagged areas, recreates conditions, and validates the true root cause.

AI surfaces performance red flags fast – testers use their expertise to uncover what’s really causing them.
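
As a rough illustration of the “surface the red flags” step, here is a minimal Python sketch that pulls latency values out of log lines and flags upward outliers with a median-based modified z-score, a common robust outlier rule. That statistical rule is a simple stand-in for the models real AI tools use, and the log format, `parse_latencies`, and `flag_spikes` are hypothetical.

```python
import re
import statistics

# Assumption: log lines contain "endpoint=<path> latency_ms=<int>".
# The format is made up for this sketch; adapt the pattern to your own logs.
LINE_RE = re.compile(r"endpoint=(?P<endpoint>\S+)\s+latency_ms=(?P<latency>\d+)")


def parse_latencies(lines: list[str]) -> dict[str, list[int]]:
    """Group latency samples by endpoint, skipping lines that don't match."""
    by_endpoint: dict[str, list[int]] = {}
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            by_endpoint.setdefault(match["endpoint"], []).append(int(match["latency"]))
    return by_endpoint


def flag_spikes(values: list[int], threshold: float = 3.5) -> list[int]:
    """Return indices of upward spikes using the median/MAD modified z-score."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # Nearly constant data: any value above the median stands out.
        return [i for i, v in enumerate(values) if v > median]
    return [i for i, v in enumerate(values)
            if 0.6745 * (v - median) / mad > threshold]


log = [f"12:0{i}:00 endpoint=/checkout latency_ms={ms}"
       for i, ms in enumerate([120, 118, 125, 122, 119, 121, 950, 123])]
log.append("12:08:00 heartbeat ok")  # non-matching line is ignored
for endpoint, samples in parse_latencies(log).items():
    for i in flag_spikes(samples):
        print(f"{endpoint}: sample {i} at {samples[i]} ms looks anomalous")
```

The median and MAD are used instead of the mean and standard deviation because a single huge spike would otherwise inflate the baseline and hide itself. A flag only says where to look; recreating the load and confirming the root cause is still the tester’s job.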

A Day in My Life with and without AI

Before AI, my testing workflow looked very different. I had to manually write test cases for every new feature or change, carefully outlining each step, data input, and expected result. I also had to manually write bug tickets whenever I found issues, documenting steps to reproduce, sharing actual versus expected behavior, and attaching screenshots.

Regression testing took much longer because every element had to be checked by hand, and that sometimes left little time for exploratory testing – the creative side of QA where real insight and hidden bugs tend to appear.

With AI, the game has completely changed. I can now generate test cases in seconds instead of hours and run pre-deploy regression checks with a single click. AI helps me prioritize high-risk areas, the parts of the application where issues are most likely to appear, so I can focus my attention where it matters most.

Most importantly, I can finally dedicate more time to exploratory testing, where human intuition and creativity make all the difference. AI doesn’t replace my role; it enhances it, handling the repetitive work so I can concentrate on uncovering deeper issues and improving overall product quality.

Speed Without Sacrificing Quality

One of the biggest frustrations in QA is the tradeoff between speed and thoroughness. Agile and DevOps have shrunk release cycles from months to days. That’s a lot of pressure to “test everything” in less time.

AI bridges that gap. By automating low-level execution and surfacing the most critical risks, it frees QA testers to focus on high-value exploratory testing – digging into the unexpected behaviors and “what if” scenarios that AI can’t predict.

Think of it this way: AI clears the highway of routine traffic, so testers can spend their energy navigating the complex city streets where real accidents are more likely to happen.

Why Testers Still Matter

If AI is so fast, why not just let it handle QA end to end? Because quality isn’t just about finding bugs – it’s about asking the right questions:

• Does this solve a customer problem?

• Is the workflow intuitive?

• What happens if a user takes an action we never expected?

• Is this app compliant and accessible?

AI can’t empathize with users or sense when a design “just feels off.” That’s what testers bring to the table. In fact, AI makes testers even more critical – freeing them from the grind so they can think deeper about quality.

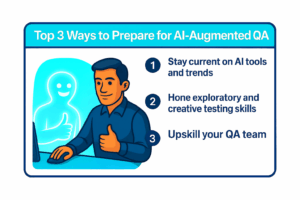

The Future: Humans and AI in Harmony

As AI matures, it will get better at handling more routine testing – but that doesn’t diminish testers. It elevates them. The testers who thrive will be those who know how to work with AI – treating it as a tool in their belt rather than a threat to their job.

Think of a pilot and an autopilot in aviation. The autopilot handles the mechanics of flight, but nobody in their right mind would get on a plane without a human pilot in the cockpit. QA is no different.

Final Thoughts

The phrase “Testing…Testing…1, 2, 3?” is about making sure everything works before the show begins. With AI, QA testers aren’t losing the mic – they’re upgrading the sound system.

At RadixBay, we help QA teams harness AI tools that amplify precision, speed, and insight – while keeping human judgment at the heart of quality.

The show still needs us to go on.

Ready to see how AI can elevate your testing performance?

Let’s make it happen! Drop me a message – I’d love to hear about your AI challenges and opportunities.

Bradley Cress

IT and software-testing writer who holds multiple AT*SQA micro-credentials and a Google IT Support Professional Certificate, and is Cybersecurity Certified.